Diamonds or DALL-E? The Luxury Dilemma

Why I deliberately ignored the best model to save a luxury marketplace from AI fakes.

Welcome back, AI Explorers!

In the world of machine learning, we often get obsessed with a single number: Accuracy. We ask:

How often is the model right?

But when you are building for the real world, especially where money and trust are on the line, being RIGHT most of the time isn’t enough. Sometimes, how you are wrong matters just as much.

In my latest project, I tackled a modern problem: distinguishing genuine photography from AI simulations. The specific use case? A high-end luxury jewelry marketplace.

Users upload photos of their expensive pieces (diamond rings, vintage watches, rare gems) to sell them on the platform. But there is a growing problem: generative AI tools like Midjourney and DALL-E are getting frighteningly good at rendering photorealistic jewelry that doesn’t exist.

My mission was to build an AI guardrail that could look at an uploaded image and immediately classify it as Real (1) or AI-Generated (0).

The Luxury Dilemma

When you are dealing with items worth $5,000 or more, the platform’s reputation is everything. In this scenario, with Real as the positive class, my model faced two very different types of failure:

The Profit Risk (False Negative): The AI mistakes a real photo of a user’s diamond ring for a fake. The user gets blocked, gets annoyed, and we lose a potential commission. This is bad, but it’s a manageable loss of profit.

The Reputation Risk (False Positive): The AI mistakes a scammer’s AI-generated image for a real photo. The listing goes live. A customer pays a fortune for a phantom product. When the item turns out to be fake (or never arrives), the platform’s credibility is destroyed.

If I had optimized my model only for Accuracy, it might have performed well overall. But if it let even a handful of AI fakes slip through because it was trying to be generally correct, the business would be in serious trouble.

Enter Precision

This is why I focused heavily on Precision. For this project, I like to call it the Reputation Metric.

Precision answers a specific, critical question for this jewelry platform:

Out of all the listings my model approved as real, how many were actually real?

The formula is simple:

Precision = True Positives / (True Positives + False Positives)

True Positive: The model said Real Jewelry, and it was real. (Safe!)

False Positive: The model said Real Jewelry, but it was AI. (Danger!)

A high Precision score means the model acts like a strict gatekeeper. It prioritizes eliminating False Positives to protect the platform’s reputation, even if that means being a bit too harsh on some real listings.
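To make the formula concrete, here is a small worked sketch in plain Python. The confusion-matrix counts are invented for illustration and are not results from the project, and the helper names `precision` and `accuracy` are just placeholders:

```python
def precision(tp: int, fp: int) -> float:
    """Share of approved listings that were actually real: TP / (TP + FP)."""
    approved = tp + fp
    return tp / approved if approved else 0.0


def accuracy(tp: int, tn: int, fp: int, fn: int) -> float:
    """Share of all listings that were classified correctly."""
    total = tp + tn + fp + fn
    return (tp + tn) / total if total else 0.0


# Hypothetical validation set of 1,000 listings (Real = 1 is the positive class).
tp = 890  # real photos approved as real
fn = 10   # real photos wrongly blocked (the Profit Risk)
fp = 30   # AI fakes approved as real (the Reputation Risk)
tn = 70   # AI fakes correctly blocked

print(f"accuracy:  {accuracy(tp, tn, fp, fn):.3f}")
print(f"precision: {precision(tp, fp):.3f}")
print(f"fake listings that went live: {fp}")
```

With these made-up counts the model is right on 96% of listings, yet 30 fake listings still go live. Precision is the number that reacts directly to that count.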

How I Implemented It

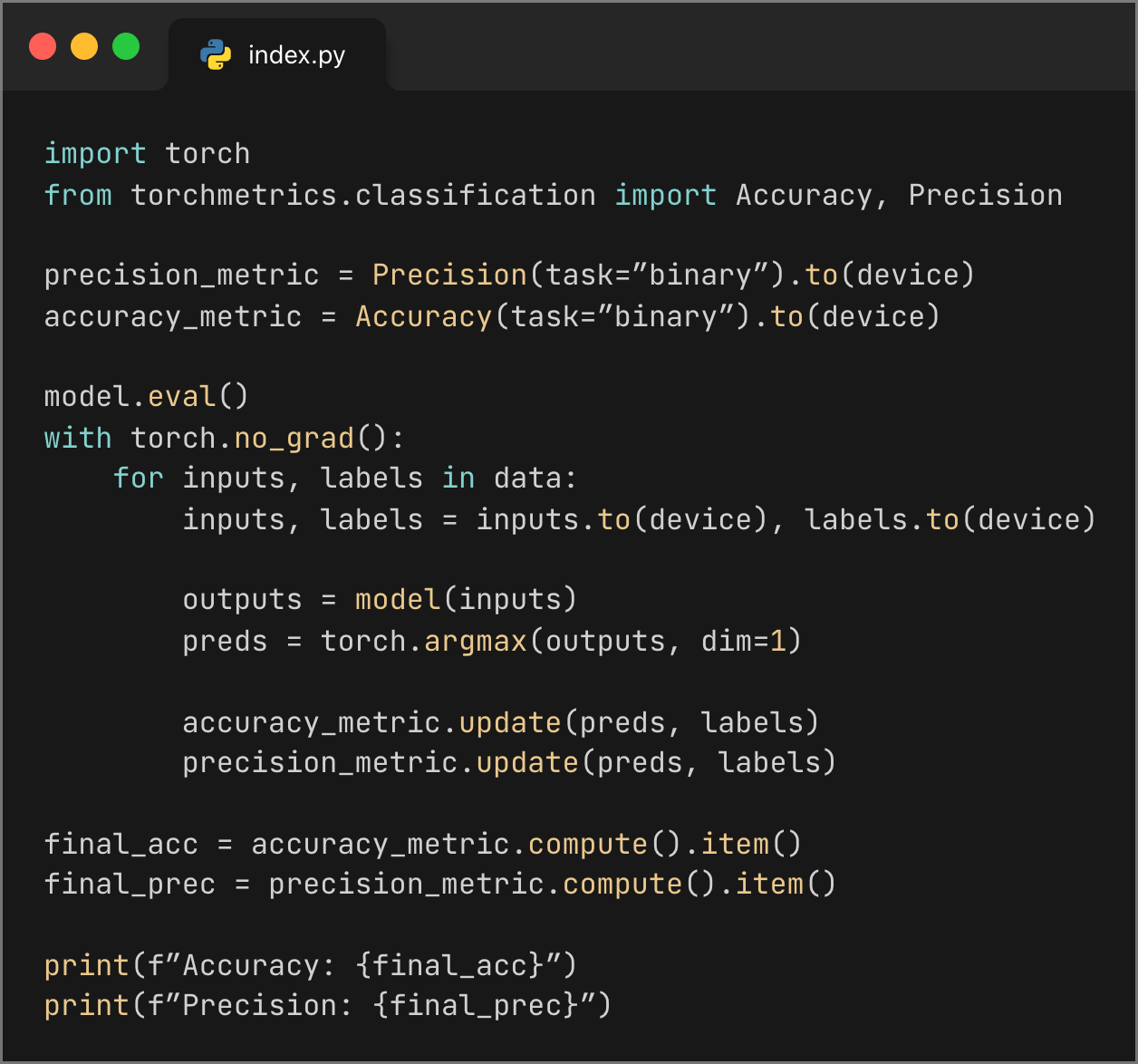

In PyTorch, we don’t need to calculate this manually. I used the torchmetrics library to track Precision alongside Accuracy during the validation loop.

Here is a snippet from my code showing how I tracked this metric:

Python code:

The Takeaway

When I ran my hyperparameter search (using Optuna), I found that the models with the highest Accuracy didn’t always have the highest Precision.

If I were building a casual photo-sharing app, I might have picked the most accurate model. But for a luxury jewelry marketplace where a single fake listing can ruin the brand? I chose the model with the highest Precision.
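That selection rule can be sketched in a few lines of Python. The trial names and scores below are hypothetical placeholders, not numbers from the actual Optuna search, and `TrialResult` and `pick_by_precision` are illustrative names:

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TrialResult:
    name: str
    accuracy: float
    precision: float


def pick_by_precision(results: list[TrialResult]) -> TrialResult:
    """Pick the highest-precision trial; break ties on accuracy."""
    return max(results, key=lambda r: (r.precision, r.accuracy))


# Hypothetical validation scores for three finished trials.
results = [
    TrialResult("trial_a", accuracy=0.960, precision=0.967),
    TrialResult("trial_b", accuracy=0.948, precision=0.998),
    TrialResult("trial_c", accuracy=0.955, precision=0.981),
]

most_accurate = max(results, key=lambda r: r.accuracy)
chosen = pick_by_precision(results)
print(f"most accurate: {most_accurate.name}, chosen for the marketplace: {chosen.name}")
```

In an Optuna setup, one way to get the same effect is to return validation precision from the objective function of a study created with `direction="maximize"`, so the search itself ranks trials by precision.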

The lesson:

Before you deploy a model, look beyond the accuracy score. Ask yourself: What is the cost of being wrong? If the cost is your reputation, make Precision your priority.

I’d love to hear your thoughts in the comments! If there’s a specific AI topic you’re curious about, let me know. I’d be happy to cover it in a future post.

References

https://docs.pytorch.org/ignite/generated/ignite.metrics.precision.Precision.html